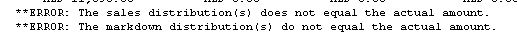

I received an email from one of my users seeking my help in clearing this issue. While posting an invoice, this error message popped up:

Upon running the Edit List for this invoice, I realized that it was due to an imbalance in Markdown Amount and corresponding distribution line. Below was the error report that I got:

There seemed to be Markdown entered on one or more line item(s), but there was no distribution line for that amount. But the issue was not THAT simple and didn’t stop there.

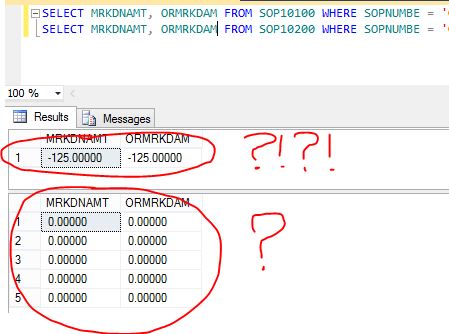

When I ran thru’ all line items, none contained a Markdown. Now that’s the problem. After some minutes of thinking, I realized something must be stranded on header record’s Markdown field, for which GP requires a Markdown distribution line, but since line items do not contain any Markdown, it’s not creating one. Strange.

I decided to query the records from SOP10100 (SOP Header) and SOP10200 (SOP Line) to understand the issue. Below is what I found:

SERIOUSLY…?!?!?!?!

But yes, that was the issue. And most baffling thing is, when I tried to reconcile this sales document, this major mishap didn’t get cleared at all.

Obvious solution for this abnormal situation is as follows:

1. Take backup of this SOP10100 record.

2. Update Markdown fields with ZERO.

3. Reconcile this sales document again to see if the above update had caused any imbalance.

Happy new year and happy troubleshooting…!!!

VAIDY